Another quick trick for the Esri JavaScript API. If the service you are accessing has min/max scales applied, but you need the data outside that scale range, here is a trick that can help out.

Before we get to the how, there are a few things you should know about this trick:

Data Holes: When Max Record Count Bites Back

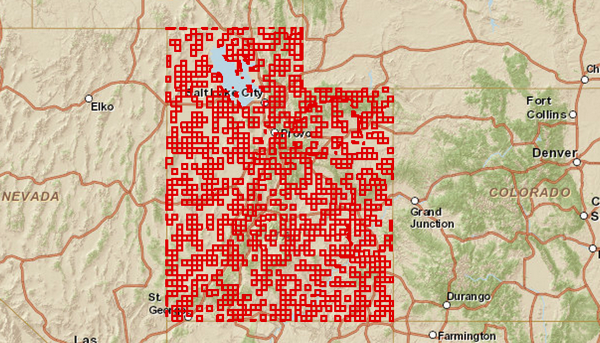

Even with the gridded queries that dynamic mode feature layers use, at small scales (zoomed way out) you will often request more features than the max record count allows. When this happens, you get “holes” in the data returned, as shown in the image above.

The default for max record count is usually 1000 features, but I think the latest release bumps this up to 2000. Regardless of the default, this value is set in the server configuration, so unless you control the server, it’s not something you can change.
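If you want to check whether a given request ran into the limit, here is a minimal sketch. It assumes you have the parsed JSON from a layer's REST `query` response, and that the server is recent enough to report the `exceededTransferLimit` flag. The function name `wasTruncated` and the fallback heuristic are my own for illustration, not part of the API.

```javascript
// Hypothetical helper: guesses whether a query response was cut off by the
// server's max record count.
// `response` is assumed to be the parsed JSON from a layer's /query endpoint.
// `maxRecordCount` is assumed to come from the layer's service metadata.
function wasTruncated(response, maxRecordCount) {
  // Servers that support it set this flag when the result set was capped.
  if (response.exceededTransferLimit === true) {
    return true;
  }
  // Fallback heuristic (an assumption): a completely full page suggests a cap.
  var count = (response.features || []).length;
  return typeof maxRecordCount === "number" && count >= maxRecordCount;
}
```

When this returns true for the tiles of a zoomed-out view, holes in the drawn data are likely.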

Performance May Suffer

Scale ranges are commonly used to avoid sending very detailed or dense data over the wire. So, even if the layer you are working with only has a few dozen features, really detailed geometries can make things really slow. If that describes your data, perhaps reconsider overriding the scale range.

How To

It’s actually really easy. Create a feature layer, then in its “load” event, reset the min/max scale properties. Then add it to the map.
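A minimal sketch of those steps, assuming the 3.x version of the API, where layers expose `on("load", ...)`, a `loaded` flag and `setScaleRange(minScale, maxScale)`, and where a scale of 0 means "no limit". The helper names `clearScaleRange` and `addLayerIgnoringScale` are hypothetical, and this is not necessarily the exact code in the JSBIN.

```javascript
// Hypothetical helper: removes the scale limits the service applied to a layer.
// Assumes a 3.x-style layer, where setScaleRange(0, 0) means "no scale limits".
// Returns the original values in case you want to restore them later.
function clearScaleRange(layer) {
  var original = { minScale: layer.minScale, maxScale: layer.maxScale };
  layer.setScaleRange(0, 0);
  return original;
}

// Hypothetical helper: resets the scale range once the layer has loaded its
// service metadata, then adds the layer to the map.
function addLayerIgnoringScale(map, layer) {
  if (layer.loaded) {
    // The layer already has its metadata, so the "load" event will not fire again.
    clearScaleRange(layer);
  } else {
    layer.on("load", function () {
      clearScaleRange(layer);
    });
  }
  map.addLayer(layer);
}
```

In a page that loads the API, you would call `addLayerIgnoringScale(map, new FeatureLayer(url))` inside your `require` callback, where `map` and `url` are your own map instance and service URL.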

Here is a link to a JSBIN you can play with.

The map below shows a feature layer from a demo server that has a minScale of 100,000, displayed on a map that is at 1:36,978,595.

[Another quick trick when using the Esri Javascript API. If you run into a scenario where the service you are accessing has min/max scales applied, but you need the data outside that scale range, here is a trick that can help out.

Before we get to the how, there are a few things you should know about this trick:

Data Holes: When Max Record Count Bites Back

Even with the gridded queries that dynamic mode feature layers use, at small scales (zoomed way out) it is common that you will be requesting more than the max record count number of features. When this happens, you get “holes” in the data returned, as shown below.

The default for max record count is usually 1000 features, but I think the latest release bumps this up to 2000. Regardless of the default, this value can be changed as part of the server configuration, so unless you control the server, it’s not something you can change.

Performance May Suffer

Scale ranges are commonly used to avoid sending very detailed or dense data over the wire. So, even if the layer you are working with only has a few dozen features, if they are really detailed geometries, things may get really slow, so perhaps reconsider.

How To

It’s actually really easy. Create a feature layer, then in it’s “load” event, reset the min/max scale properties. Then add it to the map.

Here is a link to a JSBIN you can play with.

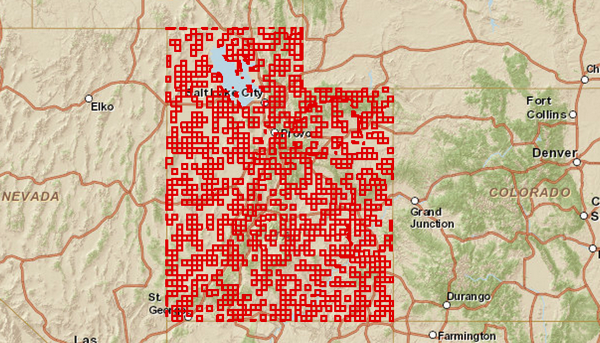

The map below shows a feature layer from a demo server that has a minScale of 100,000 shown on a map that is at 1:36,978,595

](http://jsbin.com/EXEwADO/6/embed?output){.jsbin-embed}

And of course you can use the same technique with MapService layers. Shown below is a layer that has a maxScale of 1,000,000 and we are zoomed in well past that.

MapService as FeatureService{.jsbin-embed}